Memory limitations in artificial intelligence can cause errors in processing and storing large datasets. This affects the efficiency and accuracy of AI systems.

Memory constraints in AI systems can lead to significant issues. These limitations restrict the amount of data an AI can handle, impacting performance. AI models require extensive datasets for training and operation, but memory shortages hinder this process. This results in slow processing speeds and reduced accuracy.

Memory management techniques are essential to maximize efficiency. Developers must optimize algorithms and hardware to mitigate these challenges. Addressing memory limitations ensures better AI performance and reliability. By understanding and managing these constraints, AI systems can operate more effectively and provide accurate results.

Introduction To Memory Limitations

Artificial Intelligence (AI) systems are powerful and can solve complex problems. However, AI has memory limitations, and these limitations affect its performance. Understanding these constraints is crucial for building better AI systems.

AI And Memory Constraints

AI processes large amounts of data. This requires significant memory. Limited memory can slow down AI. This impacts how well it performs tasks.

Below is a table summarizing some key points:

| Aspect | Impact |
|---|---|
| Data Processing | Slower with limited memory |
| Task Performance | Reduced efficiency |
| Complex Algorithms | Memory-intensive |
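
To make "memory-intensive" concrete, a rough estimate of the memory needed just to hold a model's weights is the parameter count multiplied by the bytes per parameter. The sketch below is illustrative only: the function name `weights_memory_gb` and the 7-billion-parameter model size are assumptions chosen for this example, and real workloads need additional memory for activations, optimizer state, and input data.

```python
# Bytes used by one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weights_memory_gb(num_params: int, dtype: str = "float32") -> float:
    """Return the approximate size of the weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical 7-billion-parameter model, chosen only for illustration.
params = 7_000_000_000
for dtype in ("float32", "float16", "int8"):
    print(f"{dtype}: {weights_memory_gb(params, dtype):.1f} GB")
```

Halving the precision halves the footprint, which is one reason lower-precision formats are a common first step when a model does not fit in memory.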

Impact On Performance

Memory constraints can affect AI performance in several ways:

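- Speed: When data does not fit in memory, the system must process it in smaller pieces or spill to slower storage, so AI becomes slower.

- Accuracy: With less memory, developers may have to use smaller models or less data, which can reduce accuracy.

- Complexity: Complex tasks need more memory, so tight limits restrict which tasks are practical.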

Optimizing memory usage is vital. This helps improve AI performance. Developers should focus on memory-efficient algorithms.

Here’s a code snippet showing memory optimization in Python:

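```python
import numpy as np

# An explicit 32-bit dtype uses half the memory of a 64-bit integer array
data = np.array([1, 2, 3, 4], dtype=np.int32)

print(data)
print(data.nbytes)  # 16 bytes: 4 elements x 4 bytes each
```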

Types Of Memory In AI Systems

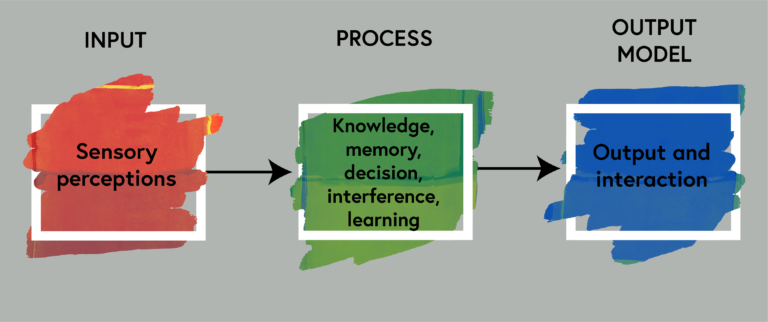

Artificial Intelligence systems have different types of memory. These memory types help AI process information and make decisions. Two primary types of memory in AI systems are short-term memory and long-term memory.

Short-term Memory

Short-term memory in AI is like a temporary workspace. It holds information for a brief period. This memory type is essential for tasks that need quick, immediate responses. For example, chatbots use short-term memory to understand and respond to user queries.

- Temporary storage

- Quick access

- Handles immediate tasks

Short-term memory is crucial for making real-time decisions. It enables AI to process ongoing data streams effectively. This memory type is often limited in capacity. It can only hold a small amount of information at a time.

| Features | Description |
|---|---|
| Duration | Short-lived |
| Capacity | Limited |
| Use Case | Real-time decisions |
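
The limited capacity described above can be sketched as a fixed-size buffer that forgets its oldest entry when a new one arrives. This is a minimal illustration, not how any particular chatbot is built; the class name `ShortTermMemory` and the sample messages are hypothetical.

```python
from collections import deque


class ShortTermMemory:
    """Keep only the most recent `capacity` messages."""

    def __init__(self, capacity: int) -> None:
        # A deque with maxlen silently discards the oldest item when full.
        self._messages = deque(maxlen=capacity)

    def remember(self, message: str) -> None:
        self._messages.append(message)

    def recall(self) -> list:
        return list(self._messages)


memory = ShortTermMemory(capacity=3)
for text in ["Hi", "I need help with my order", "It is order 42", "Where is it?"]:
    memory.remember(text)

# The first message ("Hi") has been dropped to make room for the newest one.
print(memory.recall())
```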

Long-term Memory

Long-term memory in AI stores information for extended periods. This memory type is vital for learning and adaptation. AI systems use long-term memory to retain knowledge and experiences.

- Permanent storage

- Large capacity

- Supports learning

Long-term memory helps AI improve over time. It allows AI systems to remember past interactions and outcomes. This memory type is more complex but highly beneficial.

AI systems use long-term memory for tasks requiring deep learning. Examples include image recognition and natural language processing. Long-term memory enhances the AI’s ability to learn from data and improve its performance.

| Features | Description |
|---|---|
| Duration | Long-lasting |
| Capacity | Extensive |
| Use Case | Deep learning |

Challenges In Memory Management

Managing memory in artificial intelligence (AI) systems is a complex task. Memory limitations can lead to significant errors and inefficiencies. This section explores the primary challenges in memory management within AI.

Data Overload

AI systems process vast amounts of data daily. This can lead to data overload, causing systems to slow down or crash. AI needs to handle this data efficiently to function well.

Here are some common sources of data overload:

- Large Datasets: AI often analyzes huge datasets, like images or text.

- Continuous Data Streams: Real-time data from sensors or user interactions.

- Complex Algorithms: Advanced algorithms that require significant memory.

To manage data overload, AI systems use techniques like data compression and filtering. These methods help reduce the amount of data that needs to be processed at once.
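
One simple way to avoid loading everything at once is to process data in fixed-size chunks. The sketch below assumes the data arrives as an iterable of numbers; the helper names `read_in_chunks` and `streaming_mean` are hypothetical and exist only for this example.

```python
from typing import Iterable, Iterator, List


def read_in_chunks(values: Iterable[float], chunk_size: int) -> Iterator[List[float]]:
    """Yield successive chunks so only `chunk_size` values are held at once."""
    chunk: List[float] = []
    for value in values:
        chunk.append(value)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # final, possibly shorter, chunk
        yield chunk


def streaming_mean(values: Iterable[float], chunk_size: int = 1000) -> float:
    """Compute the mean without materializing the full dataset."""
    total = 0.0
    count = 0
    for chunk in read_in_chunks(values, chunk_size):
        total += sum(chunk)
        count += len(chunk)
    return total / count if count else 0.0


# range() is lazy, so the one million values are never stored together.
print(streaming_mean(range(1_000_000)))
```

Peak memory here depends on `chunk_size`, not on the size of the dataset, which is the property that keeps a continuous stream from overwhelming the system.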

Scalability Issues

Scalability is a major concern in AI memory management. Systems must scale efficiently to handle increasing data and tasks. Poor scalability can lead to memory bottlenecks.

Scalability issues often arise from:

- Hardware Limitations: Limited memory capacity and speed.

- Software Constraints: Inadequate algorithms for memory management.

- Resource Allocation: Inefficient distribution of memory resources.

Improving scalability involves optimizing both hardware and software. Solutions include upgrading hardware and refining memory management algorithms.

To summarize, AI faces significant challenges in memory management. Addressing data overload and scalability issues is crucial for efficient AI performance.

Error Sources In AI Memory

Artificial Intelligence systems often face challenges with memory errors. These errors can significantly impact the performance of AI models. Understanding the sources of these errors is crucial for developing more efficient AI systems.

Overfitting

Overfitting occurs when an AI model learns the training data too well. This means the model performs excellently on the training data but poorly on new, unseen data. Overfitting indicates the model has memorized the data, not generalized from it.

- Symptoms: High accuracy on training data, low accuracy on test data.

- Cause: Too many parameters or an overly complex model.

To combat overfitting, data augmentation or regularization techniques can be used. These methods help the model generalize better and avoid memorizing the noise in the training data.

Underfitting

Underfitting happens when an AI model fails to capture the underlying pattern of the data. The model performs poorly on both training and test data. This indicates the model is too simple to understand the data complexities.

- Symptoms: Low accuracy on both training and test data.

- Cause: Too few parameters or overly simplistic models.

To prevent underfitting, increasing model complexity or adding more features can be helpful. This allows the model to learn more from the data, improving its performance.

| Condition | Symptoms | Cause | Solution |
|---|---|---|---|
| Overfitting | High training accuracy, low test accuracy | Too many parameters | Data augmentation, regularization |
| Underfitting | Low accuracy on both sets | Too few parameters | Increase complexity, add features |
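
The symptoms in the table can be turned into a quick check on training and test accuracy. This is a rule-of-thumb sketch: the function name `diagnose_fit` and the thresholds `low_acc` and `max_gap` are assumptions made for illustration, not standard values, and they would need tuning for a real task.

```python
def diagnose_fit(train_acc: float, test_acc: float,
                 low_acc: float = 0.70, max_gap: float = 0.10) -> str:
    """Classify a model from its training and test accuracy (values in 0..1).

    `low_acc` and `max_gap` are illustrative thresholds, not standard values.
    """
    if train_acc < low_acc and test_acc < low_acc:
        return "underfitting"   # poor on both sets
    if train_acc - test_acc > max_gap:
        return "overfitting"    # strong on training data, weak on new data
    return "reasonable fit"


print(diagnose_fit(0.99, 0.72))  # overfitting
print(diagnose_fit(0.61, 0.59))  # underfitting
print(diagnose_fit(0.91, 0.89))  # reasonable fit
```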

Strategies For Memory Optimization

Memory limitations can cause errors in artificial intelligence systems. Optimizing memory use can improve performance. This involves efficient data storage and smart memory allocation techniques.

Efficient Data Storage

Efficient data storage is vital for optimizing memory. Use data compression to save space. Remove duplicate data entries. Store only essential data. This reduces memory use.

- Data Compression: Reduces file size.

- Deduplication: Removes repeated data.

- Essential Data: Stores only needed information.
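
Deduplication and storing only essential data can be combined in a few lines. The sketch below assumes records are plain dictionaries; the function name `compact_records` and the sensor fields are made up for this example.

```python
def compact_records(records, keep_fields):
    """Drop unneeded fields, then remove records that become duplicates."""
    seen = set()
    compact = []
    for record in records:
        # Keep only the essential fields, in a fixed order.
        trimmed = tuple((field, record[field]) for field in keep_fields)
        if trimmed not in seen:  # deduplication
            seen.add(trimmed)
            compact.append(dict(trimmed))
    return compact


# Hypothetical sensor log; the field names are made up for this example.
raw = [
    {"sensor": "a1", "temp": 21.5, "debug": "x" * 100},
    {"sensor": "a1", "temp": 21.5, "debug": "y" * 100},
    {"sensor": "b2", "temp": 19.0, "debug": "z" * 100},
]
print(compact_records(raw, keep_fields=("sensor", "temp")))
```

Trimming fields before deduplicating matters here: the first two records differ only in the discarded `debug` field, so they collapse into one.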

Memory Allocation Techniques

Smart memory allocation helps to avoid errors. Divide memory into smaller chunks. Assign only the needed amount. Reuse memory when possible. These techniques improve memory use.

| Technique | Benefit |
|---|---|
| Memory Pooling | Reuses memory blocks. |
| Dynamic Allocation | Allocates memory at runtime. |
| Memory Segmentation | Divides memory into segments. |
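
Memory pooling, the first technique in the table, can be sketched as a small class that hands out fixed-size buffers and takes them back for reuse. The `BufferPool` class below is a hypothetical, simplified illustration; production pools also handle limits, thread safety, and clearing of old contents.

```python
class BufferPool:
    """Hand out fixed-size bytearrays and reuse them after release."""

    def __init__(self, buffer_size: int) -> None:
        self.buffer_size = buffer_size
        self._free = []        # buffers waiting to be reused
        self.allocations = 0   # how many buffers were actually created

    def acquire(self) -> bytearray:
        if self._free:
            return self._free.pop()
        self.allocations += 1
        return bytearray(self.buffer_size)

    def release(self, buffer: bytearray) -> None:
        # Contents are not cleared; callers are expected to overwrite them.
        self._free.append(buffer)


pool = BufferPool(buffer_size=1024)
for _ in range(100):
    buf = pool.acquire()   # reuses the same block after the first iteration
    buf[0] = 255
    pool.release(buf)

print(pool.allocations)  # 1
```

One hundred acquire and release cycles create only a single buffer, because each released block is handed out again instead of being reallocated.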

Combining these strategies can prevent memory errors. This ensures better performance of AI systems.

Advancements In Memory Technology

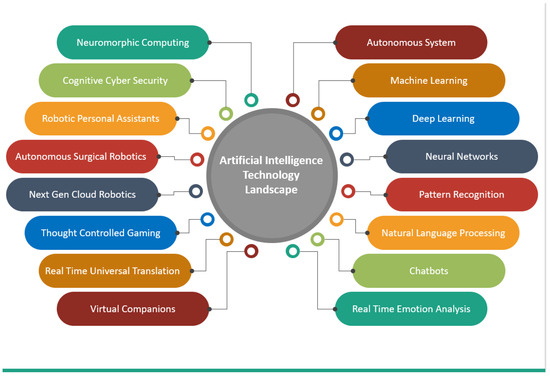

Memory limitations in Artificial Intelligence (AI) are a major challenge. Advancements in memory technology are helping overcome these barriers. This section delves into groundbreaking developments. These innovations promise to redefine AI’s capabilities.

Neuromorphic Computing

Neuromorphic computing mimics the human brain. It uses artificial neurons and synapses, and it keeps memory close to where computation happens. This design improves memory efficiency. Neuromorphic chips can process some workloads faster while consuming less power, which makes them attractive for AI applications. Such advancements could enable more complex tasks and better learning algorithms.

Quantum Memory

Quantum memory stores information in quantum bits, or qubits. Unlike a classical bit, a qubit can exist in a superposition of 0 and 1, so a register of qubits can represent many states at once, although reading it out still yields ordinary classical bits. In principle, this could speed up certain AI computations and data lookups. The technology is still experimental, so its effect on real-time AI applications and on AI error rates remains to be demonstrated.

| Technology | Key Features | Benefits for AI |
|---|---|---|
| Neuromorphic Computing | Artificial neurons and synapses | Improved efficiency and speed |
| Quantum Memory | Qubits for storage | Enhanced processing power |

These advancements promise a bright future for AI. They can help overcome memory limitations. AI systems will become more efficient and powerful.

Role Of Algorithms In Memory Efficiency

Memory limitations in AI systems can hinder performance. Efficient algorithms help overcome these constraints. They optimize memory usage and improve AI operations. This section explores key algorithms enhancing memory efficiency.

Compression Algorithms

Compression algorithms reduce the size of data. This saves memory and storage space. They work by encoding data more efficiently. Two main types of compression exist: lossless and lossy.

Lossless compression retains all original data. No information is lost. Examples include ZIP and PNG formats. These are crucial for applications needing exact data recovery.

Lossy compression reduces data size by removing some information. JPEG images and MP3 audio files use this method. It’s useful where perfect fidelity is unnecessary.

| Compression Type | Example | Use Case |
|---|---|---|
| Lossless | PNG | Images with text |
| Lossy | JPEG | Photographs |
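
Lossless compression is easy to demonstrate with Python's standard `zlib` module, which implements the DEFLATE method also used by ZIP and PNG. The sample data below is made up for the demonstration; highly repetitive data like this compresses far better than typical real-world data.

```python
import zlib

# Repetitive data compresses well; this sample text is made up for the demo.
original = b"sensor=a1;temp=21.5;status=ok\n" * 1000

compressed = zlib.compress(original, level=9)
restored = zlib.decompress(compressed)

print(len(original), "bytes before compression")
print(len(compressed), "bytes after compression")
print(restored == original)  # lossless: the round trip is exact
```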

Sparse Representations

Sparse representations use fewer memory resources. They store only essential information. This method is effective in high-dimensional spaces. It helps reduce the amount of stored data.

Sparse coding is a popular technique. It represents data using a few non-zero elements. This approach is widely used in image and signal processing.

Benefits of Sparse Representations:

- Reduces memory usage

- Speeds up data processing

- Improves efficiency in high-dimensional tasks
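
A sparse representation can be sketched with a plain dictionary that maps an index to its non-zero value. The helper names `to_sparse` and `sparse_dot` are hypothetical; numerical libraries provide optimized sparse formats, but the idea is the same.

```python
def to_sparse(dense):
    """Store only the non-zero entries as {index: value}."""
    return {i: v for i, v in enumerate(dense) if v != 0}


def sparse_dot(a, b):
    """Dot product that only visits indices present in both vectors."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller dictionary
    return sum(value * b[i] for i, value in a.items() if i in b)


# A 10,000-dimensional vector with only three non-zero entries.
dense = [0.0] * 10_000
dense[3], dense[500], dense[9_999] = 1.5, -2.0, 4.0

sparse = to_sparse(dense)
print(len(dense), "values stored densely")
print(len(sparse), "values stored sparsely")
print(sparse_dot(sparse, sparse))  # 22.25
```

The dot product touches three entries instead of ten thousand, which is where the speed benefit listed above comes from.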

Training Techniques For Memory Improvement

Artificial Intelligence (AI) often faces memory limitations during tasks. Improving memory capabilities is crucial for better performance. Training techniques can help AI systems remember more and function effectively. This section will discuss two key techniques: Transfer Learning and Reinforcement Learning.

Transfer Learning

Transfer Learning allows AI to use knowledge from one task in another. This technique helps AI systems learn faster and better. Here’s how it works:

- AI learns a task with a large dataset.

- The knowledge from this task is transferred to a new, related task.

- This reduces the need for large amounts of new data.

Benefits of Transfer Learning:

- Saves time: Less data is needed for new tasks.

- Improves accuracy: Knowledge from previous tasks helps improve results.

- Reduces costs: Fewer resources are needed for new data collection.

Reinforcement Learning

Reinforcement Learning is another powerful technique. It trains AI through rewards and penalties. The AI learns by interacting with its environment and receiving feedback. Here’s a simple process:

- AI performs an action in an environment.

- The environment provides feedback (reward or penalty).

- AI adjusts its actions based on the feedback.
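
The three steps above can be sketched with a very small example: an agent repeatedly chooses between a few actions and learns which one pays off most often. This is an epsilon-greedy "bandit" sketch, one of the simplest forms of reinforcement learning, not a full algorithm; the function name `run_bandit`, the reward probabilities, and the parameter values are assumptions made for illustration.

```python
import random


def run_bandit(reward_probs, steps=3000, epsilon=0.1, seed=0):
    """Learn which action pays best from reward (+1) and penalty (-1) feedback."""
    rng = random.Random(seed)
    estimates = [0.0] * len(reward_probs)  # running average feedback per action
    counts = [0] * len(reward_probs)

    for _ in range(steps):
        # 1. Perform an action: usually the best known one, sometimes a random one.
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))
        else:
            action = max(range(len(reward_probs)), key=lambda a: estimates[a])

        # 2. The environment returns a reward or a penalty.
        feedback = 1.0 if rng.random() < reward_probs[action] else -1.0

        # 3. Adjust the estimate for that action using an incremental average.
        counts[action] += 1
        estimates[action] += (feedback - estimates[action]) / counts[action]

    return estimates


# Hypothetical environment: three actions with different chances of reward.
estimates = run_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_action)
```

Note that the agent stores only one running average and one counter per action rather than the full history of feedback, so its memory use stays constant no matter how long it trains.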

Benefits of Reinforcement Learning:

- Adaptive learning: AI adapts to new situations quickly.

- Improves decision-making: Constant feedback helps refine actions.

- Handles complex tasks: Effective for tasks with many variables.

| Technique | Key Features | Benefits |
|---|---|---|
| Transfer Learning | Uses knowledge from one task for another | Saves time, improves accuracy, reduces costs |
| Reinforcement Learning | Trains through rewards and penalties | Adaptive learning, improves decision-making, handles complex tasks |

Case Studies On Memory Challenges

Memory limitations present significant challenges in artificial intelligence. Understanding these challenges through real-world case studies helps us learn and improve. This section explores various applications and lessons learned from memory issues in AI.

Real-world Applications

AI systems face memory challenges in various domains. Let’s look at some real-world applications:

| Application | Memory Challenge | Impact |
|---|---|---|
| Self-Driving Cars | Storing vast amounts of sensor data | Slower decision-making |
| Healthcare Diagnostics | Handling large medical datasets | Delayed diagnosis |
| Natural Language Processing | Managing extensive language models | Reduced accuracy |

Lessons Learned

From these applications, we learn important lessons:

- Optimize Data Storage: Compress data to save memory.

- Efficient Algorithms: Use algorithms that require less memory.

- Hardware Solutions: Invest in better memory hardware.

By applying these lessons, AI systems can handle memory limitations better. This leads to improved performance and reliability in real-world scenarios.

Ethical Considerations

Artificial Intelligence (AI) has revolutionized many aspects of our lives, but it has its own set of challenges and limitations. One critical area is memory limitations, which raise important ethical questions. Let’s explore two key aspects: data privacy and bias in memory allocation.

Data Privacy

One major ethical consideration is data privacy. AI systems store and process vast amounts of data. This data often contains personal information. Keeping this data safe is a big concern.

There are several ways to address this issue:

- Use strong encryption methods

- Limit data access to authorized users

- Regularly audit data usage

Here is a simple table showing recommended actions:

| Action | Description |
|---|---|
| Encryption | Convert data into a secure format |
| Access Control | Limit who can view or modify data |
| Auditing | Regular checks on data use |

Bias In Memory Allocation

Another ethical issue is bias in memory allocation. AI systems can be biased in how they store and use information. This can lead to unfair outcomes.

To reduce bias, consider the following steps:

- Ensure diverse data sets

- Regularly test for bias

- Update algorithms to be fair

Here are some key points:

- Diverse data sets help in balanced decision-making

- Bias testing ensures fairness

- Algorithm updates keep the system fair

By addressing these ethical considerations, we can create better AI systems. These systems will be more secure, fair, and trustworthy.

Future Directions In AI Memory

Artificial Intelligence (AI) is advancing rapidly. Memory limitations in AI systems are a significant challenge. Addressing these limitations opens new possibilities for AI development. This section explores the future directions in AI memory.

Emerging Trends

AI memory systems are evolving. New trends include the use of neuromorphic computing. This approach mimics the human brain. It improves memory efficiency and capacity.

Another trend is quantum computing. Quantum computing promises faster processing for certain kinds of problems. If it matures, it could reshape AI memory capabilities.

Cloud-based AI memory solutions are also gaining traction. They offer scalable and flexible memory resources. This is essential for handling large datasets.

Research Opportunities

AI memory research has several promising areas. Enhancing neural network architectures is one such area. Improved architectures can optimize memory usage.

Another opportunity is in memory-augmented neural networks (MANNs). MANNs combine neural networks with external memory. This allows for better memory management.

Research into energy-efficient memory systems is crucial. These systems reduce power consumption and extend device lifespan.

| Research Area | Focus |
|---|---|
| Neuromorphic Computing | Brain-like memory efficiency |
| Quantum Computing | Faster data processing |
| Memory-Augmented Neural Networks | Better memory management |
| Energy-Efficient Memory Systems | Reduced power consumption |

- Neuromorphic computing mimics the human brain.

- Quantum computing offers faster data processing.

- Cloud-based solutions provide scalable memory resources.

These trends and research opportunities are shaping the future of AI memory. They address current limitations and pave the way for more advanced AI systems.

Collaborative Efforts In Overcoming Memory Limits

Memory limitations in artificial intelligence can hinder progress. Collaborative efforts are essential to overcome these challenges. Industry partnerships and academic research play key roles.

Industry Partnerships

Industry partnerships bring together diverse expertise. Tech giants often collaborate to solve memory issues. For example, companies like NVIDIA and Google work on advanced AI models.

| Company | Contribution |
|---|---|
| NVIDIA | Provides advanced GPUs |
| Google | Develops efficient algorithms |

Small startups also contribute significantly. They bring innovative solutions and fresh perspectives. These collaborations speed up advancements in AI memory management.

Academic Research

Academic research is vital in overcoming memory limits. Universities and research institutes conduct groundbreaking studies. These studies often lead to significant breakthroughs.

- MIT: Works on memory-efficient neural networks.

- Stanford: Focuses on new data storage techniques.

Collaborations between academia and industry are common. They combine theoretical insights with practical applications. This synergy fosters rapid innovation in AI memory solutions.

Impact Of Memory On AI Decision Making

Memory limitations in AI impact how decisions are made. AI systems rely on memory to store and process data. Limited memory affects prediction accuracy and model reliability. This section dives into these impacts.

Accuracy Of Predictions

AI needs memory to analyze data. Limited memory can cause errors in predictions. AI might miss important details. This reduces the accuracy of results.

Memory constraints can affect data patterns. Inconsistent patterns lead to inaccurate outcomes. AI systems struggle to make correct predictions.

| Memory Size | Prediction Accuracy |
|---|---|
| Small | Low |
| Medium | Moderate |
| Large | High |

Reliability Of Models

Memory issues affect model reliability. Inconsistent memory can make models unstable. Models need stable memory to function well.

AI models depend on past data for training. Insufficient memory can lose valuable data. This impacts the model’s learning process.

- Memory stability is crucial

- Data retention improves model reliability

- Memory size affects learning efficiency

AI needs adequate memory for reliable models. Limited memory can disrupt learning and decision-making.

Frequently Asked Questions

What Are The Limitations Of Limited Memory AI?

Limited memory AI has several limitations. It struggles with long-term data retention, lacks deep understanding, and requires significant computational resources. It also can’t generalize well across different tasks and often needs frequent updates.

Why Does AI Struggle With Memory?

AI struggles with memory because of limited context retention and processing power. Without a dedicated long-term store, many systems cannot recall past interactions.

What Are The Limitations Of AI?

AI lacks emotional understanding, creativity, and common sense. It can struggle with context and nuance. Bias in data can affect outcomes. AI requires significant computational resources. It cannot replace human judgment and decision-making completely.

What Are Examples Of Limited Memory AI?

Examples of limited memory AI include self-driving cars, chatbots, and recommendation systems. These AIs learn from historical data to improve decisions.

What Causes Memory Limitations In AI?

Memory limitations in AI are caused by hardware constraints and inefficient data management techniques.

How Do Memory Limitations Affect AI Performance?

Memory limitations can slow down processing speeds and reduce the accuracy of AI models.

Can AI Memory Limitations Be Overcome?

Yes, by optimizing algorithms, using cloud storage, and improving hardware capabilities.

Why Is Memory Important For AI?

Memory is crucial for storing and processing large datasets required for training AI models.

How Do AI Algorithms Handle Memory Constraints?

AI algorithms use techniques like data compression and incremental learning to manage limited memory.

What Are The Signs Of AI Memory Limitations?

Common signs include slower processing, frequent crashes, and reduced model accuracy.

Conclusion

Understanding memory limitations in AI is crucial. Addressing these challenges can enhance AI performance and reliability. As technology evolves, ongoing research will help mitigate these errors. By staying informed, we can better harness AI’s potential. This ensures more efficient and accurate applications in various fields.

Keep exploring and learning about AI advancements.
